Our AI-generated future is going to be fantastic.

Archive link, so you don’t have to visit Substack: https://archive.is/hJIWk

Ew, substack…

Yeah sorry - edited OP and added archive link, so no one needs to hit substack servers.

Nice, thanks

Pardon my ignorance but I’m OOTL - why is substack a bad thing? (I didn’t know of its existence before this post)

Substack says it will not remove or demonetize Nazi content

Quite a few organizations and lots of individuals are now starting to migrate away from it, and if any of the money made by visiting goes to Nazis, many of us would prefer to avoid the place when possible.

They recently refused to remove pro-Nazi content from their site, claiming that doing so doesn’t make the problem go away so therefore there’s no point in trying.

For me, it’s the refusal to demonetize them that’s particularly bad. I can understand free speech absolutists (in theory anyways, in practice you’ll find that they VERY rarely actually are and will happily censor people they disagree with like LGBTQIA+ or sex work content), but that doesn’t mean you need to actively be funding them!

This is a really good point. I’m sure they’re using a payment provider, so it might be worth it to go to them directly in the same way people have gone to advertisers to get Fox News hosts fired. The argument could be made that they’re funding domestic terrorism by white nationalists. The payment provider might be much less willing to take on that risk in the name of “free speech”.

Ah yes, the automated plagiarism disguising machines in action.

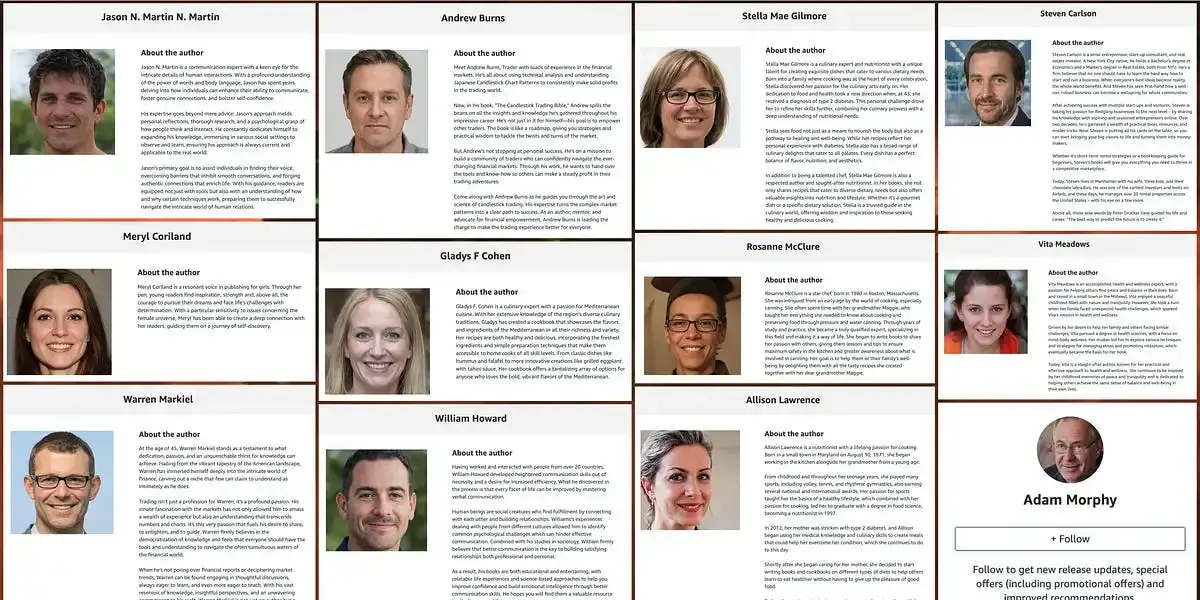

Amazon has been having problems with books written by LLMs for almost a year, and it doesn’t appear to do anything about it. For example:

AI Detection Startups Say Amazon Could Flag AI Books. It Doesn’t (Sep 2023)

A new nightmare for writers shows how AI deepfakes could upend the book industry—and Amazon isn’t helping (August 2023)

These are just two examples, you’ll find many more. But people keep buying there and support this business.

[Edit typo.]

Ah! Typical Amazon

From your second link…

The story comes from author Jane Friedman, a veteran writer and academic who woke up to find AI-generated books listed under her name on Amazon.

I don’t think AI is the problem here. It’s that I can write a book, claim George R. R. Martin is the author and Amazon won’t fact check me.

I mean, both are problems.

“Problems”… how much is Amazon’s cut?

I do almost all of my reading on a kindle - one of the ones with ads on the lock screen, and for months now all of the ads have been for this type of no-effort, low-quality, AI-generated garbage. Amazon clearly doesn’t give a fuck as long as they make money.

There’s ads on the Lock Screen now? Ugh.

They have sold two ‘tiers’ of kindles for a while, the one with ads on the lock screen is discounted.

It’s not my favorite thing but iirc the lock screen is supposed to be the only place with ads.

Yes, and in fairness to them that’s how it has been. The ads are only for stuff sold on the kindle store too, so not like you’re seeing ads for hot singles in your area or some shit on your kindle. It’s only now that the store is flooded with this AI garbage that the ads have become annoying.

I believe you’re right. However, if memory serves, the ads did change occasionally, meaning the screen had to refresh, leading to increased battery usage.

Now? They’ve had the option almost since they first started selling them. It’s like $10 to remove them permanently. Honestly didn’t realize anyone would get the one with ads on purpose but hey, I didn’t think people would use Twitter when I first heard about it either.

I bought a few of their tablets with ads on purpose - and then just blocked the ads.

I just sent them a message asking them to disable the ads on the kindle I had recently purchased, and they did so for free, no root needed. That was a few years ago though, so I doubt they do that anymore…

Earlier this year I called them and let them know that some of the ads looked a little inappropriate for some of the children who use the device… They removed them no questions asked.

Well, if they didn’t care about being flooded with machine-generated trash, they wouldn’t have cut the limit on books you can self-publish down to a mere three per day.

I don’t know how it is now, but the older ones you could root and disable the ads.

You don’t even need root, or at least you didn’t when I had mine. You just need to turn on developer mode, and then the Fire Toolkit app can do the rest.

Here’s a basically fully automated service where you can generate a shitty book for $200. You can even have it printed as a paperback for more useless waste or have it AI narrated as a shitty audiobook.

$200? I can use Llama on my computer for free.

How much time and power per page?

Then, it seems like Meta trained Llama with copyright protected books without permission, so the model might stop being free at any moment.

They’re not going to make me pay for something that’s already on my computer

Heh, we’ll see.

For starters, keep that copy safe, in case Meta has to pull down all the “illegally opensourced” copies of LLaMA. If the “authors” (publisher corporations) have their way, it will become illegal to run the model without paying them royalties, so Meta will only be able to offer it as a paid service. You might still be able to pirate it though, if people are willing to share.

For the future, when your next computer comes with neuromorphic RAM capable of running those models a billion times faster… just hope it doesn’t also come with DRM checks built in to stop illegal models from being loaded (“RAM access error: unauthorized content detected”… doesn’t that sound like every author’s dream? 🙄)

For free? The larger models require a lot of hardware.

I could use the predictive text android uses and come out with as readable a novel as anything an LLM can produce. This scam only works because people aren’t reading what they’re buying.

If you already have the computer for other reasons, such as gaming, are you really paying anything to use it for an LLM? The limiting factor isn’t raw power either, it’s VRAM size. A GTX 1080 with 8 GB is capable of running some models. But an RTX 3060 12 GB can be bought new for really cheap and is more than enough for most people’s use at home. Raw GPU power only helps with the time it takes, but even if it took 12-24 hours, well, do you want it fast or do you want it cheap?

There’s more details in this reddit thread, sorry for linking the hell site: https://old.reddit.com/r/LocalLLaMA/comments/12kclx2/what_are_the_most_important_factors_in_building_a/

Yeah, if you already have it then it’s not really an extra cost. But the smaller models perform less well and less reliably.

In order to write a book that’s convincing enough to fool at least some buyers, I wouldn’t expect a Llama2 7B to do the trick, based on what I see in my work (ML engineer). But even at work, I run Llama2 70B quantized at most, not the full size one. Full size unquantized requires 320 GB of GPU VRAM, and that’s just quite expensive (even more so when you have to rent it from cloud providers).

Although if you already have a GPU that size at home, then of course you can run any LLM you like :)
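For anyone who wants to sanity-check the VRAM point, here is a rough back-of-the-envelope sketch. It assumes the weights dominate memory use and counts only parameter count times bits per parameter (1 GB taken as 1e9 bytes). The function names are made up for illustration, and real usage will be higher once you add the KV cache, activations, and framework overhead, so treat the result as a lower bound rather than a measurement. It does not try to reproduce the 320 figure quoted above.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory needed for the weights alone, in GB (1 GB = 1e9 bytes).

    Assumption: ignores KV cache, activations, and runtime overhead,
    so the real requirement is higher than this.
    """
    return n_params * bits_per_param / 8 / 1e9


def fits_in_vram(n_params: float, bits_per_param: int, vram_gb: float) -> bool:
    """True if the weights alone would fit in the given amount of VRAM."""
    return weight_memory_gb(n_params, bits_per_param) <= vram_gb


if __name__ == "__main__":
    # Hypothetical comparison: two model sizes at three precisions on a 12 GB card.
    for name, n_params in [("7B", 7e9), ("70B", 70e9)]:
        for bits in (32, 16, 4):
            gb = weight_memory_gb(n_params, bits)
            verdict = "fits" if fits_in_vram(n_params, bits, 12) else "does not fit"
            print(f"{name} at {bits}-bit: ~{gb:.1f} GB of weights, {verdict} in 12 GB")
```

By this estimate a 7B model quantized to 4 bits needs about 3.5 GB for its weights, which is why it can run on a consumer card, while a 70B model at 16 bits needs about 140 GB before any overhead.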

Okay, now ask yourself, are these books convincing enough to fool anyone? Because it’s just as likely that company has lofty promises and uses a toaster to generate the content.

Fair enough. They only have to convince the self help books crowd 🙃

I keep waiting for Amazon to start generating AI-driven online profiles spouting AI-driven selling points for AI-generated media.

It’s the AI version of “Flood the zone with shit” except the entire internet is The Zone.

Two words: Carmen Mola.

Amazon also sell faked AI products if you search for (bizarrely)…

“goes against OpenAI use policy”

Try that string and see what happens.

Those aren’t faked products. Those are shitty products that have a shitty attempt at giving them descriptions.

The reporter explains why they don’t seem to be real. Why do you think otherwise?