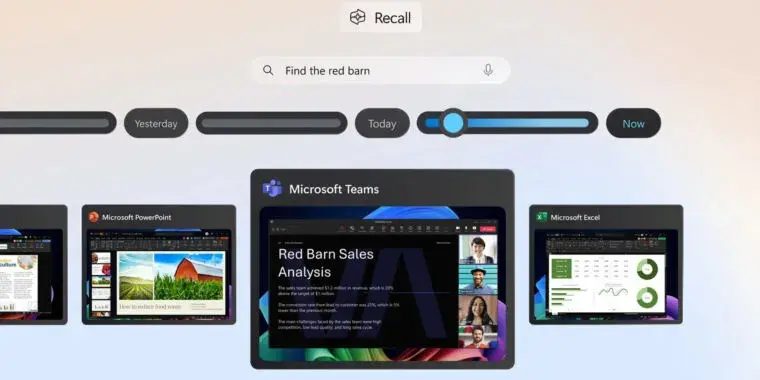

This is great, I can show all my 4k porn collection to my managers doing Teams screen sharing!

I read this as “40k porn” and was like…wtf mate.

Warhammer 40k Porn?

I wonder if the dudes in those giant armor suits with tank-sized guns are compensating for something… 🤔

That’s a wtf for you? You must be new to the internet then

Amateurs

Slaanesh.

You can do that anyway 👍

That closing quote is ominous:

“Recall is currently in preview status,” Microsoft says on its website. “During this phase, we will collect customer feedback, develop more controls for enterprise customers to manage and govern Recall data, and improve the overall experience for users.”

I read “so, yeah, we built in all the telemetry connections we swear we’ll never use … just for testing, ya know?”

more controls for enterprise customers to manage and govern Recall data

ahh ok so this is employee monitoring software

Probably more what MangoKangoroo and B0rax talked about, that enterprises can opt out of this telemetry, due to compliance or Intellectual Property protection.

So only the commoners get mandatory full-scale surveillance, ehm, I mean “AI enhancement”

Good thing I removed every Windows install I had except the one I only game on lol.

In light of the recent forays by AI projects/products into the realm of coding assistants, from Copilot to Devin, this reads to me as a sign that they’ve finally accepted that you can’t make an AI assistant that provides actual value from an LLM trained purely on text.

This is Microsoft copying Google’s captcha homework. We trained their OCR for gBooks, we trained their image recognition on traffic lights and buses and stop signs.

Now we get to train their AI assistant on how to click around a Windows OS.

yep! I didn’t pick up on any explicit link … but the coupling of AI and Recall is no coincidence. It’s serfdom.

What’s that got to do with AI?

Edit: Ah. Probably the search bar from the screenshot.

I’m curious whether the increasingly invasive telemetry of modern Windows will have legal implications surrounding patient privacy here in the US. I work IT in the healthcare field, and one of our key missions is HIPAA compliance. What, then, will be the impact if Microsoft starts storing more and more in-depth data offsite? Will keyboard entries into our EHR be tracked and stored in Microsoft’s servers? Will we subsequently be held liable if a breach at Microsoft causes this information to leak, or if Microsoft just straight-up starts selling it to advertisers? Windows is our one-and-only option for endpoint devices, so it’s not like we can just switch.

I genuinely don’t have the answers to these questions right now, but it may become a serious conversation for our department if things continue on their current trajectory. Or, maybe I’m just old and paranoid and everything will be okie dokie.

Like most of Microsoft’s more odious features, this one can be turned off through GPO/Intune policy across an organization. As such, the liability will mostly fall on the organization to make sure it’s off. The privacy and security impacts will be felt by individuals and small businesses.

They claim that the data is only stored locally, so far. We’ll see, I guess.

Sadly a lot of the privacy switches are exclusive to enterprise and education users, but our endpoints are running Pro (we have our previous supervisor to thank for that). I guess I’ll hope this is one of the ones we can just toggle off without any fuss.

I guess it will be like it was before, with a different version of Windows for these use cases. Like Windows LTSC.

I open Windows and it starts recording: opens Plex, plays Mash for 13 hours straight, PC shut down.

Well, so, you use a password generator, and a screenshot of the password is saved.

This makes most password generators useless because they show the password for user feedback. You can turn this MS AI off, but I’d have no way of knowing if a bug kept it running.


A lawsuit waiting to happen… someone needs to class action MS for systemic breaches of privacy. Think of all the critical infrastructure, government, medical, policing, etc. systems processing sensitive, private, and in some cases classified, information.

I mean, no thanks.

But they did this already, right? Their “Timeline” feature in Windows 10 recorded a log of your activities to display it in your Win+Tab menu screen. I switched it off immediately, but the point is this is a new approach to an old feature they have done in the past.

Everybody must have turned it off, though, because it hadn’t been present in Win 11 until now. It’s still a dumb idea.

I miss windows 7

THIS.

IS.

SPARTA!

Memes from the oldest of ages…

Rise up to the top of the pages!

What could go wrong? /s

NOPE!

You cannot pay me to use Windows 11.

Wish I had a choice, at work. Technically I can run Linux or MacOS, but I’d need to run a Windows VM for a few things anyway.

on your PC

*on Microsoft’s PC.

Our PC