So, while this is not exactly a typical “self-hosting” question as many users might not be using domains, I would be curious if anyone else has any experience with this.

I have Nginx Proxy Manager installed on a VPS, along with a few Docker containers that host various services (WordPress, GitLab, etc.), each bound to a specific port (WordPress on port 80 and GitLab on port 3000, to give arbitrary made-up examples).

I also have a domain and a few subdomains registered as Type A resource records that look like:

[www.]somedomain[.com]

[gitlab.]somedomain[.com]

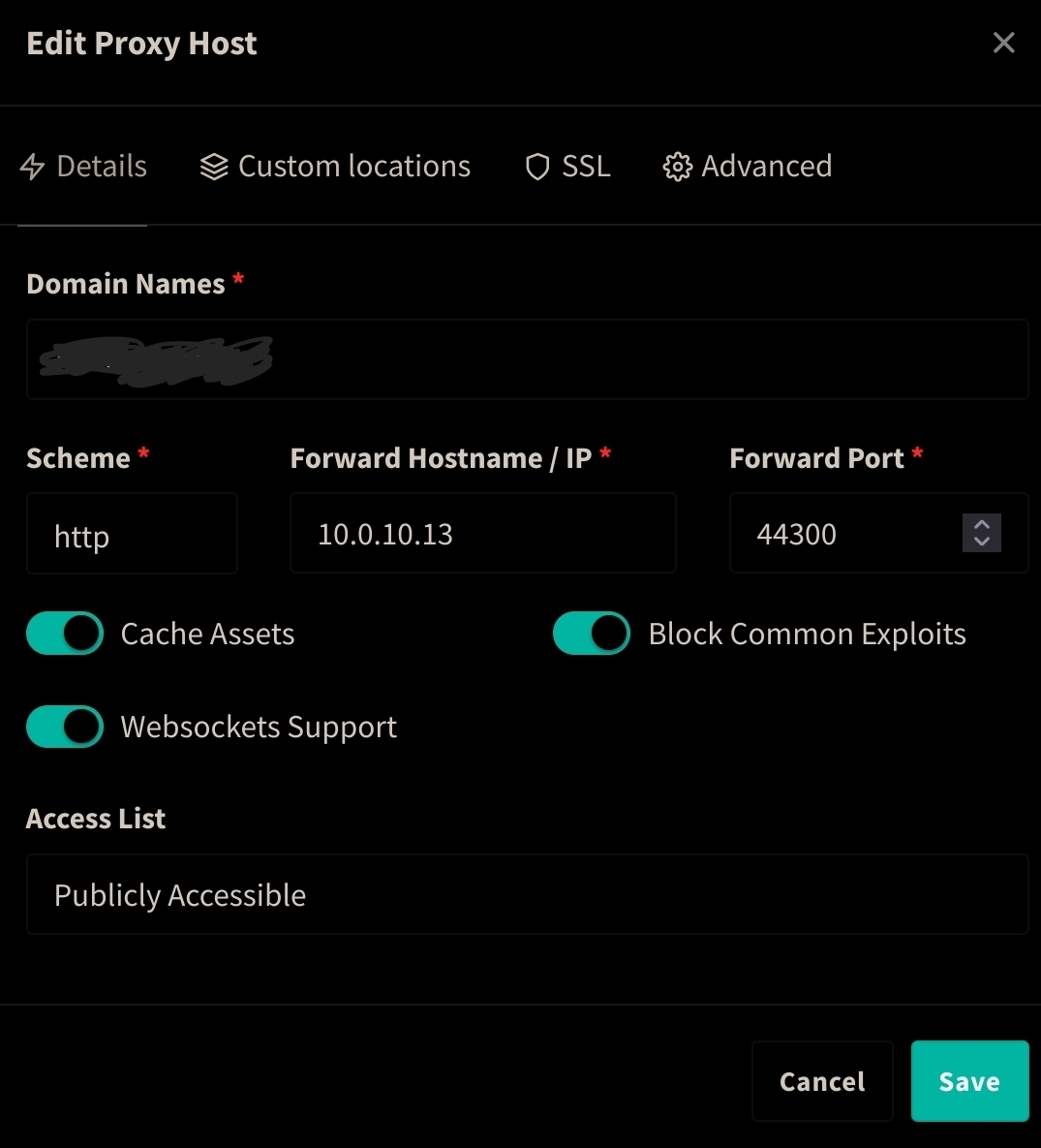

The essence of the question: when I go to Nginx Proxy Manager and register a “Proxy Host” for the gitlab subdomain, like:

Domain: gitlab.somedomain.com

Scheme: http

Forward Hostname: <IP ADDRESS HERE>

Forward Port: 3000 (AKA the port gitlab is hosted on)

This works, but it comes with the drawback that the port number is then exposed in the url bar like so:

gitlab.somedomain.com:3000

So is there some way to fix this on the Nginx Proxy Manager side of things? Or is this a case where I’m doing this completely wrong and someone with web-dev experience can help me see the light? While it’s not a huge hindrance for my use case, it would still be nice to understand how this is supposed to work, so that I can host more services that require domain names without having to shell out for dedicated IPs. If I hosted a Lemmy or Kbin instance, for example, I could then configure it to use my subdomains correctly.

I’m a little confused. Mine is set up the same way you described, I believe, and accessing the subdomain without any port still forwards correctly to the destination IP:port.

It sounds like maybe you have Nginx set up but you’re not using it. Nginx is normally listening on one port.

As an update, I think this was a side effect of how WordPress / GitLab was set up: each was configured with IP:PORT as its own URL, which forced a redirect to the port. Using the subdomain as the web URL setting seemed to resolve my problem. Thanks to everyone for the replies; all of the advice here is still really useful!
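For anyone hitting the same redirect, here is a rough sketch of what “using the subdomain as the web URL” can look like for GitLab run from Docker Compose. It assumes the official `gitlab/gitlab-ce` image and that the proxy terminates TLS in front of it; the service name and image tag are placeholders, not details from my actual setup.

```yaml
# Sketch only: service name and image tag are assumptions.
services:
  gitlab:
    image: gitlab/gitlab-ce:latest
    environment:
      GITLAB_OMNIBUS_CONFIG: |
        # Public URL that GitLab uses for links and redirects.
        external_url 'https://gitlab.somedomain.com'
        # The reverse proxy handles TLS, so GitLab's bundled nginx
        # serves plain HTTP on port 80 inside the container.
        nginx['listen_port'] = 80
        nginx['listen_https'] = false
        letsencrypt['enable'] = false
```

For WordPress, the equivalent settings are the WordPress Address and Site Address (the `WP_SITEURL` and `WP_HOME` constants), which should likewise point at the subdomain rather than IP:PORT.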

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I’ve seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| DNS | Domain Name Service/System |
| HTTP | Hypertext Transfer Protocol, the Web |
| IP | Internet Protocol |
| NAT | Network Address Translation |
| VPS | Virtual Private Server (opposed to shared hosting) |
| nginx | Popular HTTP server |

5 acronyms in this thread; the most compressed thread commented on today has 17 acronyms.

[Thread #198 for this sub, first seen 8th Oct 2023, 09:05]

Edit: ignore everything below; I just realized it’s all running on a VPS. In that case, move WordPress off port 80. NPM needs 80 and 443.

~~Assuming you have port forwarding on your router set up with 80 and 443 being forwarded to the local IP of the machine hosting NPM…

Another problem you might run into is NAT Loopback, but it all depends on your router.

So you type lemmy.domain.com (no port), it goes to DNS which points it to your home IP, it goes back through your router and gets forwarded to NPM, and NPM does the handling of which IP:PORT to serve up.

I have AdGuard Home doing all of my DNS, so to get around the NAT loopback problem I have custom DNS rewrite rules. It’s very simple in my case: *.domain.com gets directed to the local IP of NPM.

When I’m outside my home network, NAT loopback is no longer a problem and things work as they should.

Sorry for rambling, just reciting some general overview stuff that I’ve learned along the way.~~

You should not be able to access port 3000 in your scenario. The fact that you can suggests that you used `network_mode: host` for your GitLab container, which has exposed it on the host’s interface, and that your firewall is not filtering it.

- Block everything on the VPS public interface except 443, 80 and 8081, which should be forwarded to the NPM container.

- All your containers should use network mode “bridge” unless they explicitly require another mode for a good reason. On a VPS there’s usually not going to be a reason to do that.

- In bridge mode you can expose ports inside the container to the host with the “ports:” directive, but only NPM’s 80, 443 and 8081 (admin interface) should be exposed that way. For everything else it’s best to use a Docker network and connect the containers to the NPM container, since that’s the only one that really needs to access them.

How this works:

- Regular containers expose their ports only to the NPM container on the private docker network.

- 443 on the VPS external interface gets through the firewall and forwarded to 443 on the NPM container.

- NPM looks at the domain name and proxies the connection to the appropriate container hostname/IP and port, as you configured it. Btw, it’s easier to give containers hostnames and refer to them by name than to track Docker bridge IPs.

- Port 80 behaves the same way, but as soon as you get Let’s Encrypt certificates and get 443 going you can close it down (in both the VPS firewall and the NPM container port mapping), provided your certificate renewals use a DNS challenge; HTTP-01 renewals still need port 80 open.

- You should also set up a proxy host for the NPM admin interface on 8081, at which point you can stop forwarding/mapping that port too.
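The layout described above could be sketched in Docker Compose roughly as follows. Treat it as an illustration under assumptions rather than a drop-in file: the image tags, the service names, the `proxy` network name, and the volume paths are all placeholders, and GitLab stands in for any backend service.

```yaml
# Sketch only: names, tags, and paths are assumptions.
services:
  npm:
    image: jc21/nginx-proxy-manager:latest
    restart: unless-stopped
    ports:
      - "80:80"     # HTTP
      - "443:443"   # HTTPS
      - "8081:81"   # admin UI (NPM listens on 81 inside the container)
    volumes:
      - ./npm/data:/data
      - ./npm/letsencrypt:/etc/letsencrypt
    networks:
      - proxy

  gitlab:
    image: gitlab/gitlab-ce:latest
    hostname: gitlab
    restart: unless-stopped
    # No "ports:" section here, so nothing is published on the VPS
    # public interface; only containers on "proxy" can reach it.
    networks:
      - proxy

networks:
  proxy:
    driver: bridge
```

With something like this in place, the NPM proxy host for gitlab.somedomain.com would use the service name `gitlab` as the Forward Hostname and whatever port the service listens on inside its container as the Forward Port, so no backend port ever shows up in the URL.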