I’m a retired Unix admin. It was my job from the early '90s until the mid '10s, and I’ve kept somewhat current ever since by running various machines at home. So far I’ve managed to avoid using Docker at home even though I have a decent understanding of how it works: after I stopped being a sysadmin I still worked for a technology company and did plenty of “interesting” reading and training.

It seems that more and more stuff that I want to run at home is being delivered as Docker-first and I have to really go out of my way to find a non-Docker install.

I’m thinking it’s no longer a fad and I should invest some time getting comfortable with it?

dude, im kinda you. i just jumped into docker over the summer… feel stupid not doing it sooner. there is just so much pre-created content, tutorials, you name it. its very mature.

i spent a weekend containerizing all my home services… totally worth it and easy as pi[hole] in a container!

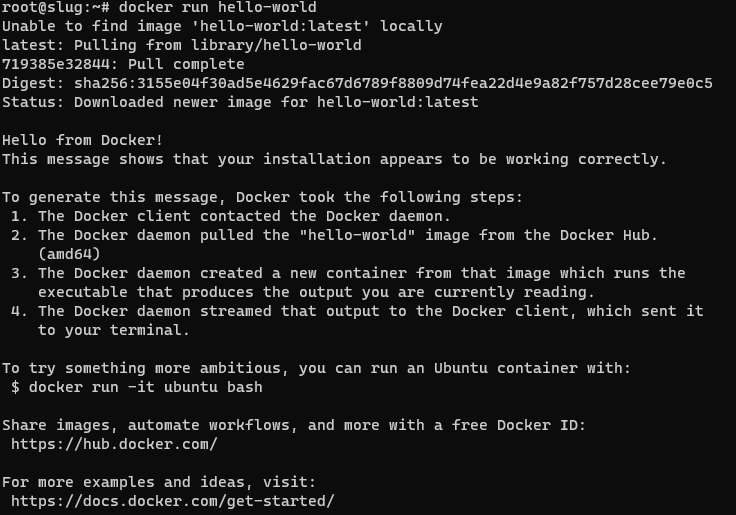

Well, that wasn’t a huge investment :-) I’m in…

I understand I’ve got LOTS to learn. I think I’ll start by installing something new that I’m looking at with docker and get comfortable with something my users (family…) are not yet relying on.

Forget `docker run`, `docker compose up -d` is the command you need on a server. Get familiar with a UI, it makes your life much easier at the beginning: portainer or yacht in the browser, lazy-docker in the terminal.

`# docker compose up -d`
`no configuration file provided: not found`

Like `docker run` by itself, it’s not the full command. You need a compose file: https://docs.docker.com/engine/reference/commandline/compose/

Basically it’s the same as `docker run`, but all the configuration is read from a file instead of from command-line arguments, which makes it more easily reproducible: you just have to store those files. Compose commands are very important for self-hosting, where your containers are expected to run all the time.
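For anyone who wants to see the mapping, here is a minimal sketch of a compose file next to the `docker run` line it roughly replaces. The service name `web`, the `./site` directory, and host port 8080 are made up for illustration; `nginx:alpine` is just a convenient public image to try it with.

```yaml
# docker-compose.yml
# Roughly equivalent to:
#   docker run -d --name web -p 8080:80 \
#     -v "$PWD/site:/usr/share/nginx/html:ro" \
#     --restart unless-stopped nginx:alpine
services:
  web:                      # illustrative service name
    image: nginx:alpine
    container_name: web
    ports:
      - "8080:80"           # host port 8080 -> container port 80
    volumes:
      # Relative paths are resolved from the directory holding this file
      - ./site:/usr/share/nginx/html:ro
    restart: unless-stopped
```

With that file in the current directory, `docker compose up -d` has something to read and starts the container in the background.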

Yeah, I get it now. Just the way I read it the first time it sounded like you were saying that was a complete command and it was going to do something “magic” for me :-)

You need to create a docker-compose.yml file. I tend to put everything in one dir per container so I just have to move the dir somewhere else if I want to move that container to a different machine. Here’s an example I use for picard, with examples of NFS mounts and local bind mounts with paths relative to the directory the docker-compose.yml is in. You basically just put this in a directory, create the local bind mount dirs in that same directory, and adjust YOURPASS and the mounts/NFS shares, and it will keep working everywhere you move the directory as long as the machine has docker and the image is available for the system’s architecture.

```yaml
version: '3'
services:
  picard:
    image: mikenye/picard:latest
    container_name: picard
    environment:
      KEEP_APP_RUNNING: 1
      VNC_PASSWORD: YOURPASS
      GROUP_ID: 100
      USER_ID: 1000
      TZ: "UTC"
    ports:
      - "5810:5800"
    volumes:
      # local bind mount, relative to this file's directory
      - ./picard:/config:rw
      # named volumes backed by NFS, defined below
      - dlbooks:/downloads:rw
      - cleanedaudiobooks:/cleaned:rw
    restart: always
volumes:
  dlbooks:
    driver_opts:
      type: "nfs"
      o: "addr=NFSSERVERIP,nolock,soft"
      device: ":NFSPATH"
  cleanedaudiobooks:
    driver_opts:
      type: "nfs"
      o: "addr=NFSSERVERIP,nolock,soft"
      device: ":OTHER NFSPATH"
```

dockge is amazing for people that see the value in a gui but want it to stay the hell out of the way. https://github.com/louislam/dockge lets you use compose without trapping your stuff in stacks like portainer does. You decide you don’t like dockge, you just go back to cli and do your `docker compose up -d --force-recreate`.

Second this. Portainer + docker compose is so good that now I go out of my way to composerize everything so I don’t have to run docker containers from the cli.

I would suggest docker compose before a UI to someone that likes to work via the command line.

Many popular docker repositories also automatically give docker run equivalents in compose format, so the learning curve is not as steep vs what it was before for learning docker or docker compose commands.

There is even a tool to convert Docker Run commands to a Docker Compose file :)

Such as this one hosted by Opnxng:

https://it.opnxng.com/docker-run-to-docker-compose-converter

If you are interested in a web interface for management check out portainer.

As a guy who’s you before summer.

Can you explain why you think it is better now after you have ‘contained’ all your services? What advantages are there, that I can’t seem to figure out?

Please teach me Mr. OriginalLucifer from the land of MoistCatSweat.Com

No more dependency hell from one package needing `libsomething.so 5.3.1` and another service that absolutely can only run with `libsomething.so 4.2.0`.

That and knowing that when I remove a container, it’s not leaving a bunch of cruft behind.

Modularity, compartmentalization, reliability, predictability.

One piece of software needs MySQL 5, another needs MariaDB 10. A third service needs PHP 7 while the distro-supported version is 8. A fourth service uses CUDA 11.7, not 11.8 which is what everything in your package manager uses. A fifth service’s install was only tested on the latest Ubuntu, and now you need to figure out which rpm gives the exact library it expects. A sixth service expects ODBC to be set up in a very specific way, but handwaves it in the installation docs. A seventh program expects a symlink at a specific place that exists on the desktop version of the distro, but not the server version. And then you’ve got that weird program that insists on admin access to the database so it can create its own user. Since I don’t trust it with that, let it just have its own database server running in docker and good riddance.

And so on and so forth… with docker not only is all this specified in excruciating details, it’s also the exact same setup on every install.

You don’t have it not working on arch because the maintainer of a library there decided to inline a patch that supposedly doesn’t change anything, but somehow causes the program to segfault.

I can develop a service on windows, test it, deploy it to my Kubernetes cluster, and I don’t even have to worry about which machine to deploy it on, it just runs it on a machine. Probably an Ubuntu machine, but maybe on that Gentoo node instead. And if my osx friend wants to try it out, then no problem. I can just give him a command, and it’s running on his laptop. No worries about the right runtime or setting up environment or libraries and all that.

If you’re an old Linux admin… This is what utopia looks like.

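Edit: And recreating a container is almost like reinstalling the OS and the program. Since the image is static, removing the container and starting a new one from the image (which is what `docker compose up` does after a `down`, or with `--force-recreate`) removes all file system cruft too and starts up a pristine new copy (except, of course, the specific files and folders you have chosen to save in volumes). A plain stop/start of the same container keeps its writable layer.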

It sounds very nice and clean to work with!

If I’m lucky enough to get the Raspberry 5 at Christmas, I will try to set it up with docker for all my services!

Thanks for the explanation.

Just remember that the Raspberry Pi has an ARM CPU, which is a different architecture. Docker can build images for it from another machine, and can produce images for multiple architectures automatically. It takes more time and space though, as it runs an ARM emulator to build them.

https://www.docker.com/blog/faster-multi-platform-builds-dockerfile-cross-compilation-guide/ has some info about it.

You can also back up your compose file and data directories, pull the backup from another computer, and as long as the architecture is compatible you can just restore it with no problem. So basically, your services are a whole lot more portable. I recently did this when dedipath went under. Pulled my latest backup to a new server at virmach, and I was up and running as soon as the DNS propagated.

Learning docker is always a big plus. It’s not hard. If you are comfortable with cli commands, then it should be a breeze. Even if you are not comfortable, you should get used to it very fast.

Definitely not a fad. It’s used all over the industry. It gives you a lot more control over the environment where your hosted apps run. There may be some overhead, but it’s worth it.

It just makes things easier and cleaner. When you remove a container, you know there is no leftover except mounted volumes. I like it.

Not completely true: you will probably have to prune some images or volumes.

It’s also way easier if you need to migrate to another machine for any reason.

I use LXC for all the reasons most people use Docker, it’s easy to spin up a new service, there are no leftovers when I remove a service, and everything stays separate. What I really like about LXC though is that you can treat containers like VMs, you start it up, attach and install all your software as if it were a real machine. No extra tech to learn.

As someone who is not a former sysadmin and only vaguely familiar with *nix, I’ve been able to turn my home NAS (bought strictly to hold photos and videos backed up from our phones) into a home media server by installing Docker, learning how the yml files work, how containers network, etc, and it’s been awesome.

I am a network engineer and I am learning it. I see it as the next step in the bare metal -> virtualisation evolution.

IMO, yes. Docker (or at least OCI containers) aren’t going anywhere. Though one big warning to start with, as a sysadmin, you’re going to be absolutely aghast at the security practices that most docker tutorials suggest. Just know that it’s really not that hard to do things right (for the most part[1]).

I personally suggest using rootless podman with docker-compose via the podman-system-service.

Podman re-implements the docker cli using the system namespacing (etc.) features directly instead of through a daemon that runs as root. (You can run the docker daemon rootless, but it clearly wasn’t designed for it and it just creates way more headaches.) The Podman System Service re-implements the docker daemon’s UDS API which allows real Docker Compose to run without the docker-daemon.

[1] If anyone can tell me how to set SELinux labels such that both a container and a samba server can have access, I could fix my last remaining major headache.

I don’t know if this is what you are looking for but I used :z with podman mounting and it Just Works*.

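`podman run -d -v /dir:/var/lib/dir:z image`

From the documentation: `:z` or `:Z` relabels the volume for container use. Lowercase `:z` gives it a label that can be shared between containers, while uppercase `:Z` gives it a private label for that one container.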

Unfortunately, no. Samba needs a different label. Doing that relabels things so that only containers (and anything unrestricted) can access those files.

Docker is amazing, you are late to the party :)

It’s not a fad, it’s old tech now.

Yes. Let me give you an example of why it is very nice: I migrated one of my machines at home from an old x86-64 laptop to an arm64 odroid this week. I had a couple of applications running, 8 or 9 of them, all organized in a docker compose file with all persistent storage volumes mapped to plain folders in a directory. All I had to do was stop the compose setup, copy the folder structure, install docker on the new machine and start the compose setup. There was one minor hiccup since I forgot that one of the containers was built locally, but since all the other software has arm64 images available under the same name, it just worked. Changed the host IP and done.

One of the very nice things is the portability of containers, as well as the reproducibility (within limits) of the applications, since you divide them into stateless parts (the container) and stateful parts (the volumes), definitely give it a go!

Why not jump directly to Podman if you want a more resilient system from the beginning? Just my opinion.

Why not? Because I’ve never heard of it until this thread - lots of people mentioning it so obviously I’ll look into it.

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I’ve seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| DNS | Domain Name Service/System |
| Git | Popular version control system, primarily for code |
| HTTP | Hypertext Transfer Protocol, the Web |
| IP | Internet Protocol |
| LXC | Linux Containers |
| NAS | Network-Attached Storage |
| PIA | Private Internet Access brand of VPN |
| Plex | Brand of media server package |
| RAID | Redundant Array of Independent Disks for mass storage |
| SMTP | Simple Mail Transfer Protocol |
| SSD | Solid State Drive mass storage |
| SSH | Secure Shell for remote terminal access |
| SSL | Secure Sockets Layer, for transparent encryption |
| VPN | Virtual Private Network |
| VPS | Virtual Private Server (opposed to shared hosting) |
| k8s | Kubernetes container management package |
| nginx | Popular HTTP server |

15 acronyms in this thread; the most compressed thread commented on today has 10 acronyms.

[Thread #349 for this sub, first seen 13th Dec 2023, 17:15]

As a casual self-hoster for twenty years, I ran into a consistent pattern: I would install things to try them out and they’d work great at first; but after installing/uninstalling other services, updating libraries, etc, the conflicts would accumulate until I’d eventually give up and re-install the whole system from scratch. And by then I’d have lost track of how I installed things the first time, and have to reconfigure everything by trial and error.

Docker has eliminated that cycle—and once you learn the basics of Docker, most software is easier to install as a container than it is on a bare system. And Docker makes it more consistent to keep track of which ports, local directories, and other local resources each service is using, and of what steps are needed to install or reinstall.

It’s very, very useful.

For one thing, it’s a ridiculously easy way to get cross-distro support working for whatever it is you’re doing, no matter the distro-specific dependency hell you have to crawl through in order to get it set up.

For another, rather related reason, it’s an easy way to build for specific distros and distro versions, especially in an automated fashion. Don’t have to fuck around with dual booting or VMs, just use a Docker command to fire up the needed image and do what you gotta do.

Cleanup is also ridiculously easy. Complete uninstallation of a service running in Docker simply involves removal of the image, any containers attached to it, and any volumes you no longer want.

A couple of security rules you should bear in mind:

- expose only what you need to. If what you’re doing doesn’t need a network port, don’t provide one. The same is true for files on your host OS, RAM, CPU allocation, etc.

- never use privileged mode. Ever. If you need privileged mode, you are doing something wrong. Privileged mode exposes everything and leaves your machine ripe for being compromised, as root if you are using Docker.

- consider podman over docker. The former does not run as root.
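To make those rules a bit more concrete, here is a rough sketch of what they can look like in a compose file. The service name `app`, the image `example/app:latest`, the port, the paths, and the limits are all placeholders I made up; the keys themselves are standard Compose options. Dropping every capability can break some images, so treat that part as a starting point to loosen, not a guarantee.

```yaml
services:
  app:
    image: example/app:latest        # hypothetical image name
    # Expose only what is needed: one port, bound to localhost only
    ports:
      - "127.0.0.1:8080:8080"
    # Mount only the directories the service needs, read-only where possible
    volumes:
      - ./config:/config:ro
      - ./data:/data
    # Cap RAM and CPU instead of handing over the whole host
    mem_limit: 512m
    cpus: 1.0
    # No "privileged: true" here; drop capabilities and add back
    # individual ones with cap_add only if the service really needs them
    cap_drop:
      - ALL
    security_opt:
      - no-new-privileges:true
    restart: unless-stopped
```

If the service does not need to be reachable from outside the Docker network at all, the `ports:` section can simply be left out.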

I’m gonna play devil’s advocate here.

You should play around with it. But I’ve been a Linux server admin for a long time and — this might be unpopular — I think Docker is unimportant for your situation. I use Docker daily at work and I love it. But I didn’t bother with it for my home server. I’ll never need to scale it or deploy anything repeatedly or where I need 100% uptime.

At home, I tend to try out new things and my old docker-compose files are just not that valuable. Docker is amazing at work where I have different use cases but it mostly just adds needless complexity on a home server.

This is kinda where I’m at as well. I have always run my home services each in their own VM. There’s no fuss to set up a new one, if I want to move it to a different server I just copy the *.img file over and launch it. Sure I run a lot of internet services across my various machines but it all just works so I don’t understand what purpose there would be to converting all the custom configurations over to docker. It might make sense if I was trying to run all my services directly on the bare metal, but who does that?

VMs have much bigger overhead, for one. And VMs are less reproducible too. If you had to set up a VM again, do you have all the steps written down? Every single step? Including that small “oh right” thing you always forget? A Dockerfile is basically just a list of those steps, written in a way a computer can follow. And every time you build an image in docker, it just plays that list and gives you the resulting file system ready to run.
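As a rough illustration of "a list of steps a computer can follow", here is a sketch that keeps the Dockerfile inline in a compose file. It assumes a Compose version recent enough to support `dockerfile_inline`; the service name `tool`, the installed packages, and `run.sh` are invented for the example and not taken from anyone's real setup.

```yaml
services:
  tool:                               # hypothetical service name
    build:
      context: .
      # Assumes a recent Compose release that understands dockerfile_inline
      dockerfile_inline: |
        # Start from a known base, like a fresh OS install
        FROM debian:bookworm-slim
        # The "steps you would otherwise have to write down"
        RUN apt-get update \
         && apt-get install -y --no-install-recommends curl ca-certificates \
         && rm -rf /var/lib/apt/lists/*
        # run.sh is a placeholder script sitting next to this compose file
        COPY ./run.sh /usr/local/bin/run.sh
        RUN chmod +x /usr/local/bin/run.sh
        CMD ["/usr/local/bin/run.sh"]
    restart: unless-stopped
```

Running `docker compose build` replays those steps from scratch, so trying a different package version means editing one line and rebuilding.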

It’s incredibly practical in some cases. Let’s say you want to try a different library or upgrade a component to a newer version. With VMs you could do it live, but you risk not being able to go back. You could make a copy or make a checkpoint, but that’s rather resource intensive. With docker you just change the Dockerfile slightly and build a new image.

The resulting image is also immutable, which means that if you recreate the docker container (remove it and start a fresh one from the image), it’s like reverting to the first VM checkpoint after a finished install, throwing out any cruft that has gathered. You can exempt specific files and folders from this, if needed, by putting them in volumes. So all the cruft and changes that have happened get thrown out, except the data folder(s) for the program.

I’m not sure I understand this idea that VMs have a high overhead. I just checked one of my servers, there are nine VMs running everything from chat channels to email to web servers, and the server is 99.1% idle. And this is on a poweredge R620 with low-power CPUs, it’s not like I’m running something crazy-fast or even all that new. Hell until the beginning of this year I was running all this stuff on poweredge 860’s which are nearly 20 years old now.

If I needed to set up the VM again, well I would just copy the backup as a starting point, or copy one of the mirror servers. Copying a VM doesn’t take much, I mean even my bigger storage systems only use an 8GB image. That takes, what, 30 seconds? And for building a new service image, I have a nearly stock install which has the basics like LDAP accounts and network shares set up. Otherwise once I get a service configured I just let Debian manage the security updates and do a full upgrade as needed. I’ve never had a reason to try replacing an individual library for anything, and each of my VMs run a single service (http, smtp, dns, etc) so even if I did try that there wouldn’t be any chance of it interfering with anything else.

Honestly from what you’re saying here, it just sounds like docker is made for people who previously ran everything directly under the main server installation and frequently had upgrades of one service breaking another service. I suppose docker works for those people, but the problems you are saying it solves are problems I have never run into over the last two decades.

Nine. How much ram do they use? How much disk space? Try running 90, or 900. Currently, on my personal hobby kubernetes cluster, there’s 83 different instances running. Because of the low overhead, I can run even small tools in their own container, completely separate from the rest. If I run say… a postgresql server… spinning one up takes 90mb disk space for the image, and about 15 mb ram.

I worked at a company that did, among other things, hosting, and was using VMs for easier management and separation between customers. I wasn’t directly involved in that part day to day, but was friends with the main guy there. It was tough to manage. He was experimenting with automatically creating and setting up new VMs, stripping them of unused services and files, and having different sub-scripts for different services. This was way before docker, but already then admins were looking in that direction.

So aschually, docker is kinda made for people who run things in VMs, because that is exactly what they were looking for and duct taping things together for before docker came along.

Yeah I can see the advantage if you’re running a huge number of instances. In my case it’s all pretty small scale. At work we only have a single server that runs a web site and database so my home setup puts that to shame, and even so I have a limited number of services I’m working with.

Yeah, it also has the effect that when starting up say a new postgres or web server is one simple command, a few seconds and a few mb of disk and ram, you do it more for smaller stuff.

Instead of setting up one nginx for multiple sites you run one nginx per site and have the settings for that as part of the site repository. Or when a service needs a DB, just start a new one just for that. And if that file analyzer ran in its own image instead of being part of the web service, you could scale that separately… oh, and it needs a redis instance and a rabbitmq server, that’s two more containers, that serve just that web service. And so on…

Things that were a huge hassle before, like separate mini VMs for each sub-service, and unique sub-services for each service, don’t just become practical but easy. You can define all the services and their relations in one file and docker will recreate the whole stack with all services with one command.
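A small sketch of what "all the services and their relations in one file" can look like for a single site with its own nginx, database, and cache. The `example/site-app` image, its `DATABASE_URL`/`REDIS_URL` variables, the credentials, and the paths are placeholders for illustration; the `POSTGRES_*` variables are the ones the official postgres image reads.

```yaml
services:
  site:
    image: nginx:alpine
    volumes:
      # nginx settings live in the site repository, next to this file
      - ./nginx.conf:/etc/nginx/conf.d/default.conf:ro
      - ./public:/usr/share/nginx/html:ro
    ports:
      - "8081:80"
    depends_on:
      - app
  app:
    image: example/site-app:latest   # hypothetical application image
    environment:
      # Hypothetical variable names; other services are reached by service name
      DATABASE_URL: postgres://site:CHANGEME@db:5432/site
      REDIS_URL: redis://cache:6379
    depends_on:
      - db
      - cache
  db:
    image: postgres:16               # a database just for this site
    environment:
      POSTGRES_USER: site
      POSTGRES_PASSWORD: CHANGEME
      POSTGRES_DB: site
    volumes:
      - ./pgdata:/var/lib/postgresql/data
  cache:
    image: redis:7
```

Copying the directory and changing the host port gives a second, independent copy of the whole stack, which is how the instance count climbs so quickly.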

And then it also gets super easy to start more than one of them, for example for testing or if you have a different client. … which is how you easily reach a hundred instances running.

So instead of a service you have a service blueprint, which can be used in service stack blueprints, which allows you to set up complex systems relatively easily. With a granularity that would traditionally be insanity for anything other than huge, serious big-company deployments.

> Instead of setting up one nginx for multiple sites you run one nginx per site and have the settings for that as part of the site repository.

Doesn’t that require a lot of resources since you’re running (mysql, nginx, etc.) numerous times (once for each container), instead of once globally?

Or, per your comment below:

> Since the base image is static, and config is per container, one image can be used to run multiple containers. So if you have a postgres image, you can run many containers on that image. And specify different config for each instance.

You’d only have two instances of postgres, for example, one for all docker containers and one global/server-wide? Still, that doubles the resources used no?

Well congrats, you are the first person who has finally convinced me that it might actually be worth looking at even for my small setup. Nobody else has been able to even provide a convincing argument that docker might improve on my VM setup, and I’ve been asking about it for a few years now.

That’s exactly how I feel about it. Except (as noted in my post…) the software availability issue. More and more stuff I want is “docker first” and I really have to go out of my way to install and maintain non docker versions. Case in point - I’m trying to evaluate Immich so I can move off Google photos. It looks really nice, but it seems to be effectively “docker only.”

I’m probably the opposite of you! Started using docker at home after messing up my raspberry pi a few too many times trying stuff out, and not really knowing what the hell I was doing. Since moved to a proper NAS, with (for me, at least) plenty of RAM.

Love the ability to try out a new service, which is kind of self-documenting (especially if I write comments in the docker-compose file). And just get rid of it without leaving any trace if it’s not for me.

Added portainer to be able to check on things from my phone browser, grafana for some pretty metrics and graphs, etc etc etc.

And now at work, it’s becoming really, really useful, and I’m the only person in my (small, scientific research) team who uses containers regularly. While others are struggling to keep their fragile python environments working, I can try out new libraries, take my env to the on-prem HPC or the external cloud, and I don’t lose any time at all. Even “deployed” some little utility scripts for folks who don’t realise that they’re actually pulling my image from the internal registry when they run it. A much, much easier way of getting a little time-saving script into the hands of people who are forced to use Linux but don’t have a clue how to use it.

The advantage of docker, as I see it for home labs, is keeping things tidy, ensuring compatibility, and easy to manage/backup setup configs, app configs, and app data. It is all very predictable and manageable. I can move my docker compose and data from one host to another in literal seconds. I can, likewise, spin up and down test environments in seconds too. Obviously the whole scaling thing that people love containers for is pointless in a homelab, but many of the things that make it scalable also make it easy to manage.

Why would you try avoiding it if you understand how it works? It has so many upsides and so few downsides. About the only practical one is using more disk space. It was groundbreaking technology in 2013. Today it’s an old and essential tool.

Because it seems overkill for a home server. Up until recently all I ran was Samba and a torrent daemon. Why would I install another layer of overhead to manage two applications on one server?

Because the overhead is practically none, barring the extra disk space. Maybe it’s not worth using it for Samba and Transmission. But involve OpenVPN for Transmission in the mix and things get a lot more complicated if Samba has to keep serving LAN and Transmission has to stop whenever OpenVPN stops. If instead you grab this (the haugene/transmission-openvpn image), the problem is solved by writing one 20-line docker-compose.yml and doing `docker-compose up -d`:

```yaml
version: '3.3'
services:
  transmission-openvpn:
    cap_add:
      - NET_ADMIN
    volumes:
      - '/your/storage/path/:/data'
      - '/your/config/path/:/config'
    environment:
      - OPENVPN_PROVIDER=PIA
      - OPENVPN_CONFIG=france
      - OPENVPN_USERNAME=user
      - OPENVPN_PASSWORD=pass
      - LOCAL_NETWORK=192.168.0.0/16
    logging:
      driver: json-file
      options:
        max-size: 10m
    ports:
      - '9091:9091'
    restart: on-failure
    image: haugene/transmission-openvpn
```

A benefit of Docker’s that helps even with a single-service deployment is the packaging side. It allows for running near-arbitrary service versions on top of your host OS: stale, stable, bleeding edge or anything in-between.