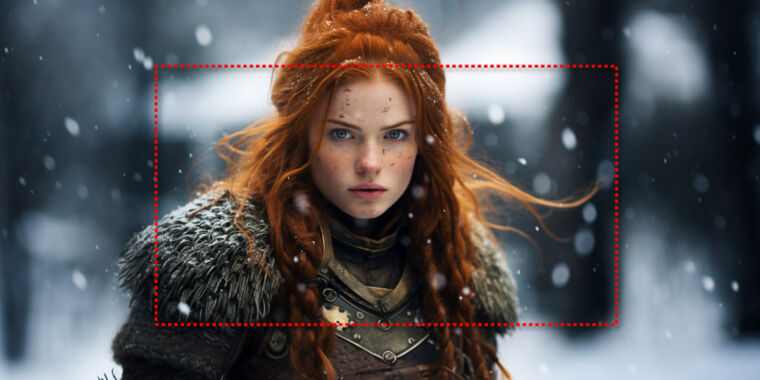

Midjourney v5.2 features camera-like zoom control over framing, more realism.

Imagine where this is going to be in only 10 years. Then imagine where it’ll be in 100.

Is there a Moore-type law for AI I wonder?

The latest season of Black Mirror has an episode that kind of predicts where this sort of thing is heading…

Black Mirror S6E1 Spoiler

A Netflix analog uses a futuristic equivalent of AI to generate shows targeted specifically at individual users. It uses data mined from the user’s devices to get insight into the user’s life, and uses that as the basis for the show.

Yeah, Joan Is Awful was good.

Thing is though: even AI wouldn’t be able to do that unless there was someone/something actually watching every moment of Joan’s life. Then they could feed that into the AI and be good to go.

But I was also really, REALLY unsober when I watched it, so that might have been addressed and I can’t remember.

The show did address it:

spoiler

It was her phone and “smart” devices; it was implied that some kind of Amazon Echo / Alexa / Siri style service was listening in on her entire life and that that was what was being used to generate the show.

AHHH that’s right, I completely forgot that.

I need to stop being so drunk and stoned when I watch shows lmfao

Hey, that’s alright. Depending on the show, I could see that being a big improvement to the experience!

Is there a Moore-type law for AI I wonder?

Many IT fields follow parabolic or S-shaped curves in their progress. It’s part of the reason technology has advanced so quickly over the past hundred-ish years.

I imagine we’ll hit a ceiling soon enough and stay there until the next major breakthrough is found. There is also concern about gathering good training data in the future, as the internet has started to get littered with AI-generated content. I think I read an article earlier about how a machine learning system performs worse if it’s trained on secondary data generated by another ML system.

Given the progress in just the last year… that’s terrifying.

How’s that different from outpainting, which has been a thing for a very long time?

Psst. Don’t tell anybody, but the answer to your question is in the second paragraph in the article:

Similar to outpainting—an AI imagery technique introduced by OpenAI’s DALL-E 2 in August 2022—Midjourney’s zoom-out feature can take an existing AI-generated image and expand its borders while keeping its original subject centered in the new image. But unlike DALL-E and Photoshop’s Generative Fill feature, you can’t select a custom image to expand. At the moment, v5.2’s zoom-out only works on images generated within Midjourney, a subscription AI image-generator service.

Haha, you got me :)

For some reason, Midjourney is getting more hype than the alternatives, which is especially sad for Stable Diffusion, being open source and all.

What a horrible title. This feature is not new in generative AI. Even Photoshop has had it for a while now, which means it existed long before it got implemented there.