> This Lemmy instance is much harder to maintain due to the fact that I can’t tell what images get uploaded here, which means anyone can use this as a free image host for illegal shit, and the fact that there’s no user list that I can easily see. Moderation tools are nonexistent on here.

0.19.4 provides a way to see uploaded images (although not the best one), but that version was only recently released, so I can see where the frustration is coming from, especially since the CSAM attacks happened nearly a year ago. At the time, I had to make a copy of the pictrs data, go through everything in a file manager, and manually remove those images. People can still upload images without anyone seeing them, however.
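For anyone stuck doing the same manual review, here is a rough Python sketch of the "go through the copy" step. It only lists files in a copied directory, newest first, so the most recent uploads show up at the top. The function name `list_files` and the path `./pictrs-copy` are made up for illustration, and I'm assuming nothing about how pictrs lays out its storage beyond "files in nested folders", so treat it as a starting point rather than a real moderation tool.

```python
from pathlib import Path


def list_files(root, suffixes=None):
    """Return (path, size_bytes, mtime) tuples for files under root, newest first.

    suffixes is an optional set of lowercase extensions (e.g. {".png", ".jpg"});
    when it is None, every regular file is listed.
    """
    entries = []
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        if suffixes is not None and path.suffix.lower() not in suffixes:
            continue
        stat = path.stat()
        entries.append((path, stat.st_size, stat.st_mtime))
    # Sort by modification time so recent uploads come first.
    entries.sort(key=lambda entry: entry[2], reverse=True)
    return entries


# Hypothetical usage against a COPY of the data, never the live store:
# for path, size, mtime in list_files("./pictrs-copy"):
#     print(size, path)
```

Run it against a copy only, and do the actual removal through whatever purge tooling your setup provides, since deleting files by hand can leave the database pointing at images that no longer exist.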

> It also eats up storage like crazy due to the fact that it rapidly caches images from scraped URLs and the few remaining instances that we still federate with.

This was fixed in 0.19.3 (released 7 months ago), which lets you disable image “caching”. Together with pictrs’ image processing, this has solved storage costs for us.

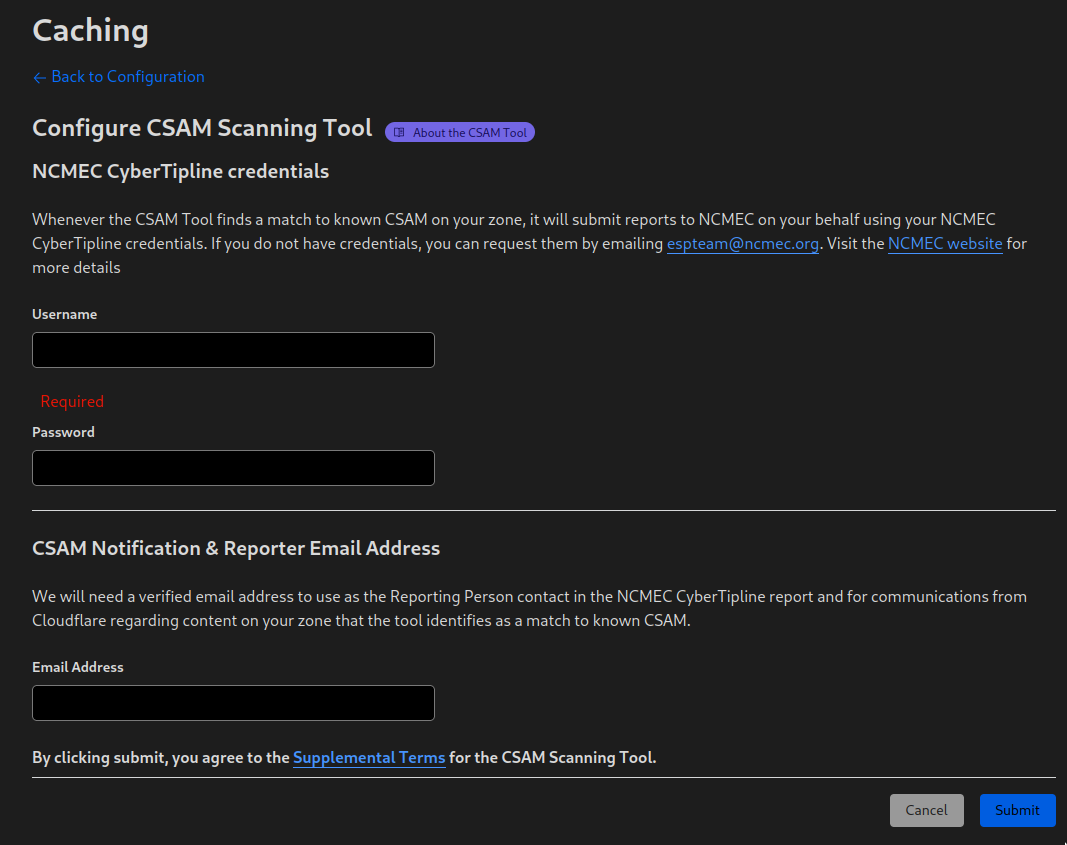

> plug in an expensive AI image checker to scan for illegal imagery

It’s unfortunate that we need this. Not everybody has the resources to run fedisafety, nor does everyone live in the USA, where they can use Cloudflare’s CSAM scanner. I think a good way to deal with the issue is to not store images that aren’t public (or to have no private images at all). That way, every stored image is visible to someone and can be easily reported.

Overall, I understand the frustration, and to some degree I feel the same, but I also limit my expectations considering the nature of the project.

I also really like the tunnels feature. It makes self-hosting at home easy for those behind NAT/CGNAT or whatever it was called.