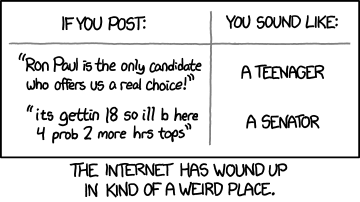

I used to think typos meant that the author (and/or editor) hadn’t checked what they wrote, so the article was likely poor quality and less trustworthy. Now I’m reassured that it’s a human behind it and not a glorified word-prediction algorithm.

That’s a very interesting take, my friend.

You can easily have an AI include some random typos. Don’t be fooled by them.
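To show how little effort that takes, here’s a minimal sketch of a post-processing step that sprinkles typos into any text, AI-generated or not. `add_typos` is a hypothetical helper written for illustration. It only swaps two adjacent inner letters in a random fraction of words and leaves the first and last letters alone. Real bots might do something fancier; nobody in the thread said what they actually use.

```python
import random
from typing import Optional


def add_typos(text: str, rate: float = 0.05, seed: Optional[int] = None) -> str:
    """Hypothetical helper: swap two adjacent inner letters in ~`rate` of the words."""
    rng = random.Random(seed)
    out = []
    for word in text.split(" "):
        # Only touch words long enough to have two inner letters to swap
        if len(word) > 3 and rng.random() < rate:
            # Pick i so that positions i and i+1 are both strictly inside the word
            i = rng.randrange(1, len(word) - 2)
            word = word[:i] + word[i + 1] + word[i] + word[i + 2:]
        out.append(word)
    return " ".join(out)


if __name__ == "__main__":
    print(add_typos("Now I am reassured that a human wrote this", rate=0.3, seed=42))
```

The typo rate is a single knob, so the output can look as sloppy or as careful as you like.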

That’s what the AI wants you to think.

Kind of like how tiny imperfections in products make us think they’re handmade.


Pier 1 thanks you for your business.

Tiny brown hands, most likely

AI that is parsing Lemmy: “Noted.”


Lmao, imagine getting referred to a doctor for surgery, you look them up, and their professional webpage is like: “i wen’t 2 harverd”

They’re not saying they treat the lack of typos as a bad sign, but rather that they treat typos as a good sign. Those are not the same thing.

Think of AI more like human cultural consciousness that we collectively embed into everything we share publicly.

It’s a tool that is available for anyone to tap into. The thing you are complaining about is not the AI; it is the output of the person who wrote the code that generated it. They are leveraging a tool, but the tool is not the problem. This is like blaming Photoshop because a person uses it to make child porn.

Photoshop is a general-purpose image editing tool that is mostly harmless. That’s not the case for AI. The people who created these models, and who let other people use them, do so without enough consideration of risks they know are much, much higher than with something like Photoshop.

What you say applies to Photoshop because the devs know what it can do, and the possible damage from misuse is within reason. The AIs you are talking about are controlled by the companies that create them and use them to provide services. It follows that it is their responsibility to make sure their products are not as harmful as they currently are, especially when the consequences are not fully known.

Your reasoning is the equivalent of saying it’s the kids’ fault for getting addicted to predatory mobile games and wasting excessive money on them. Except it’s not entirely their fault, and programs aren’t just neutral tools; they are customised to the will of their owners (the companies behind them). So there is such a thing as an evil tool.

It is the responsibility of all those companies, the people involved, and lawmakers to make the new technology safe, with minimal negative impact on society, rather than chase their own profits while ignoring the moral choices.

This is not true. You do not know all the options that exist, or how they really work. I do. I only use open-source, offline AI; I do not use anything proprietary. All LLMs are just a complex system of categories combined with a complex network that calculates which word should come next. Everything else is external to the model. The model itself is nothing like an artificial general intelligence. It has no persistent memory. The only thing it actually does is predict which word should come next.
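The “predict the next word, append it, repeat” loop can be illustrated with a toy example. This is a minimal sketch, not how an LLM works internally: it swaps the neural network for plain bigram counts (how often word B followed word A in the training text) and always picks the most frequent follower (greedy decoding). Real models work on tokens rather than whole words and often sample instead of taking the top choice. All function names here are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(corpus: str) -> Dict[str, Counter]:
    """Count, for every word, which words followed it in the corpus."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    words = corpus.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never followed."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts: Dict[str, Counter], start: str, length: int) -> str:
    """Repeatedly predict the next word and append it; no memory beyond the last word."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


if __name__ == "__main__":
    model = train_bigrams("the cat sat on the mat the cat ran")
    print(generate(model, "the", 3))
```

The toy also shows the “no persistent memory” point: each prediction looks only at the previous word, and nothing is kept between calls to `generate`.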

I get where you’re coming from, but isn’t it sort of similar to the “guns don’t kill people, people kill people” argument? At what point is a tool at least partially culpable for the crimes committed with it?

It is extremely easy for AI to insert typos. Just FYI.

AI makes typos.

Hell, when we played around with ChatGPT code generation, it literally misspelled a variable name, which broke the code.

A while back, Google trained an AI to speak like a human, and it was making mouth noises and breathing. If AI is trained on human text, it will 100% insert typos.

Somehow I can pretty easily tell AI apart by reading what it writes. Motivation, meaning what they’re writing for, is a big tell, and it depends on what they’re saying. ChatGPT and shit won’t go off on tangents; it reads like a Wikipedia-style description with some extra hallucination in there. Real people will throw in some dumb shit and start arguing with you.

I have a janitor.ai character that sounds like an average Redditor, since I just fed it average Reddit posts as its personality.

It says stupid shit and makes spelling errors a lot, is incredibly pedantic and contrarian, etc. I don’t know why I made it, but it’s scary how real it is.

What motivation would someone have to randomly run that?

Also, you just added new information to the discussion about something you personally did. Can an AI do that?

It is an AI. It’s a frontend for ChatGPT. All I did was coax the AI to behave in a specific way, which anyone else using these tools is capable of doing.

You shouldn’t. Repost bots on Reddit had already figured out how to use misspellings/typos to get past spam filters.
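Why a single misspelling is enough: a naive duplicate filter that fingerprints the exact text of a post only catches character-for-character copies. This is a minimal sketch of such a hypothetical filter (it is not Reddit’s actual spam filter, whose internals aren’t described here). It normalizes case and surrounding whitespace, hashes the result with SHA-256, and flags a post as a repost if that hash was seen before.

```python
import hashlib
from typing import Set


def fingerprint(text: str) -> str:
    """Hash the post after trivial normalization (case and surrounding whitespace)."""
    return hashlib.sha256(text.strip().lower().encode("utf-8")).hexdigest()


def is_repost(text: str, seen: Set[str]) -> bool:
    """Hypothetical exact-match filter: True if this fingerprint was already seen."""
    fp = fingerprint(text)
    if fp in seen:
        return True
    seen.add(fp)
    return False


if __name__ == "__main__":
    seen: Set[str] = set()
    print(is_repost("Check out this amazing original post", seen))
    print(is_repost("check out this amazing original post ", seen))
    print(is_repost("Check out this amazing orignal post", seen))
```

The second call is caught because normalization erases the differences, but the third slips through: one swapped letter changes the hash completely. Filters that compare fuzzy similarity instead of exact fingerprints are harder to fool this way.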